Our procedures are a bit more involved than this...

Tuesday, February 25, 2014

If it's not graded, I won't do it

One task all faculty at GVSU are asked to do every February is prepare an annual Faculty Activity Report (FAR). This entails compiling a complete list of our efforts for the past calendar year in the areas of teaching, scholarship, and service.

One piece of our FAR includes a reflection on trends we have noticed in our student evaluations of instruction. A colleague who had read my teaching reflection invited me to share the following in the hope that others seeking to make sense of and respond to student evaluations of instruction might find it useful.

Crowd-sourcing Our Midterm Review

I had to take a sick day today, so we are reviewing for our Mth323 midterm exam by crowd-sourcing a review guide. Students have been asked to add at least two tips for our learning targets, plus as many questions as they have. Check it out (updated every 5 minutes or so):

Sunday, February 23, 2014

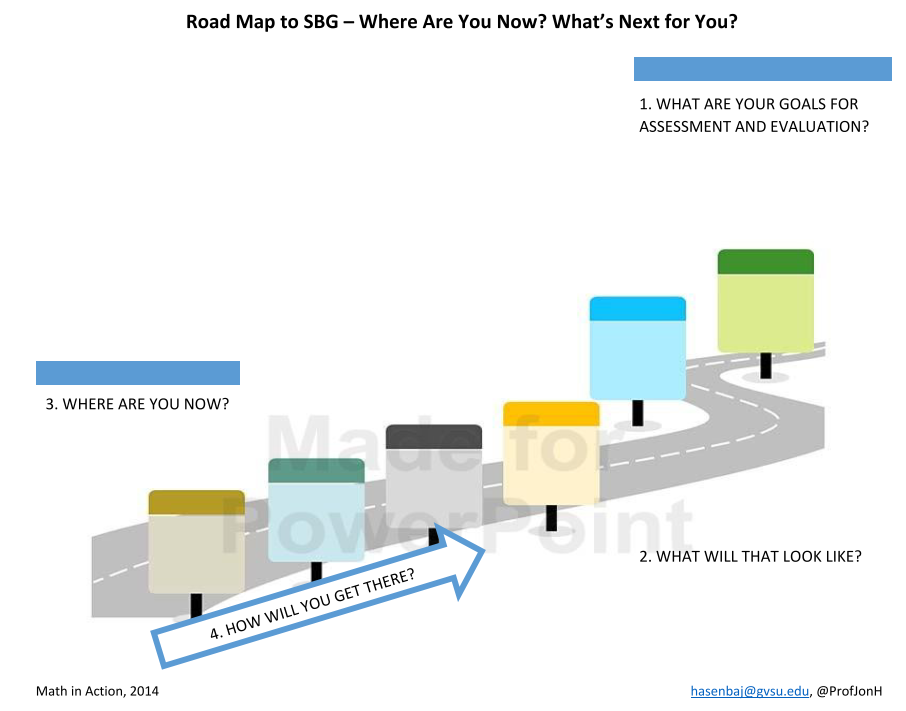

SBG at Math in Action (2014)

Here are the slides from my talk at the 2014 GVSU Math in Action Conference.

SBG has grown too big for this post! To access my growing collection of learning targets, presentations, and links to a variety of SBG resources, please visit the new Standards Based Grading page.

Tuesday, February 11, 2014

A Question of Balance

This post is based on a student comment that caught my eye while grading. The data below show the sodium content (in mg) for 23 brands of "regular" peanut butter.

The student wrote one of the following two statements in defense of choosing the preferred measure of center.

1) The data are balanced on each side of the median.

2) The data are balanced on each side of the mean.

Which statement do you think is more correct? In what sense might someone think the other one is correct, too?

Ready... GO!!

Monday, February 10, 2014

Why Precision Matters

The following home workshop was used with preservice teachers in an effort to highlight the importance of attending to precision when doing mathematics investigations.

Wednesday, February 5, 2014

How might you improve?

How convincing is this? How might you do better?

These questions were bothering me. I had just completed a paper with colleagues Lenore Kinne and Dave Coffey on the effective use of rubrics for formative assessment (in press). In it, we wrote:

...a good rubric provides feedback on the progress that has been made toward the goal while simultaneously communicating ways the performance might be improved (emphasis added).

My rubric did the first part, but it bothered me that it didn't do the second part.

For that, I had to write comments on students' papers. I realized I was writing the same sort of comments over and over. I needed to do something about that.

Those Less Convincing Performances

I recently completed my second semester of standards-based grading. I have learned a lot, and will try to post more about that this semester.

I had a conversation with a colleague, Pam Wells, about the SBG system I'd been using. The conversation got me thinking about how to handle the, shall we say, less convincing performances.

Email to Pam on the matter:

Pam, I appreciated the opportunity to talk with you about SBG on Friday. Since then, my thoughts keep returning to the rubric and the question of what a “1” means. I think I will change how I use 1’s in the future.

When we talked, we agreed that 1 basically means “you don’t get this yet.” In that sense, a 1 should not count as evidence of proficiency at all. So a 1 and a 0 are similar: they mean “no evidence provided”, but for different reasons. Anything at a 0 or 1 simply does not count as a piece of evidence for that target. If we require two pieces of evidence for each target, this reinforces the mastery mode of grading implicit in SBG. If you don’t get to two pieces of evidence, the grade is reduced.
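The counting rule in this email can be pictured with a short Python sketch. It is only an illustration under stated assumptions: the email says that 0s and 1s do not count and that two pieces of evidence are required per target, but the idea that any score of 2 or higher counts as evidence, the target names, and the sample scores are all hypothetical.

```python
# Assumption: rubric scores of 2 or higher count as evidence.
# The email only states that 0s and 1s do NOT count.
EVIDENCE_THRESHOLD = 2

# From the email: two pieces of evidence are required for each target.
REQUIRED_PIECES = 2


def count_evidence(scores):
    """Count the scores that qualify as evidence (0s and 1s are ignored)."""
    return sum(1 for score in scores if score >= EVIDENCE_THRESHOLD)


def targets_met(scores_by_target):
    """Map each learning target to True if it has enough pieces of evidence."""
    return {
        target: count_evidence(scores) >= REQUIRED_PIECES
        for target, scores in scores_by_target.items()
    }


# Hypothetical student record: target name -> rubric scores earned so far.
record = {
    "LT1": [1, 3, 4],  # the 1 is ignored; two pieces of evidence remain
    "LT2": [0, 1, 3],  # only one score counts as evidence
    "LT3": [2, 2],     # two pieces of evidence
}

print(targets_met(record))
# {'LT1': True, 'LT2': False, 'LT3': True}
```

Under this sketch, a 0 and a 1 are treated identically when counting, which matches the point that both mean "no evidence provided"; how an unmet target reduces the final grade is left out because the email does not specify it.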
